Low-Level API

Overview of the Low-Level API

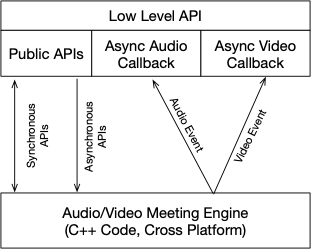

The low-level API is a thin layer that rests on top of the audio/video engine, providing a platform-appropriate interface to its functionality.

There are three main components to this low-level interface:

- A set of public APIs that are accessible in a platform-appropriate manner:

  - Java APIs for Android

  - Objective-C/Swift APIs for iOS and macOS

  - C# APIs for Windows

- An asynchronous "Callback" function that will be invoked whenever there is an audio event that needs to be reported back through the SDK

- An asynchronous "Callback" function that will be invoked whenever there is a video event that needs to be reported back through the SDK

The public APIs come in two types:

- Synchronous APIs that return a result immediately

- Asynchronous APIs whose results are communicated back through one or more audio/video events

When you use the low-level API, you commit to working with low-level audio and video events specified in XML format. Although a number of the available API calls are "convenience" APIs that allow you to specify parameters as arguments instead of XML strings, the majority of communication with the underlying audio/video engine involves XML-formatted messages.

With the low-level API, you can initialize and join a meeting and enable sending your audio and video into the meeting as a participant. To receive data back from the audio/video engine, you need to register separate audio and video callback functions (generally, C-style functions that take two string parameters as arguments). When audio and video events occur that you need to be aware of, the engine calls the appropriate audio or video callback function with an XML string containing the event data. It is your responsibility to parse this data and create whatever data structures you need to persist it in your application.
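As an illustration of the parsing responsibility described above, here is a minimal Java sketch of a callback that turns each XML message into an application-owned object. It is not the SDK's actual interface: the `EngineEventCallback` name, the meaning given to the two string parameters, and the `streamId` attribute are all assumptions made for the example. Only the standard `javax.xml.parsers` and `org.w3c.dom` classes are real.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.xml.sax.InputSource;

// Hypothetical callback shape: two strings, as the text describes.
// What each parameter means here is an assumption, not the SDK's contract.
interface EngineEventCallback {
    void onEvent(String context, String xml);
}

// Application-owned record of one event; the engine knows nothing about it.
final class ParsedEvent {
    final String type;     // name of the XML root element
    final String streamId; // hypothetical "streamId" attribute, "" when absent

    ParsedEvent(String type, String streamId) {
        this.type = type;
        this.streamId = streamId;
    }
}

final class VideoEventCollector implements EngineEventCallback {
    private final List<ParsedEvent> events = new ArrayList<>();

    @Override
    public synchronized void onEvent(String context, String xml) {
        try {
            Element root = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)))
                    .getDocumentElement();
            events.add(new ParsedEvent(root.getTagName(), root.getAttribute("streamId")));
        } catch (Exception e) {
            // Malformed XML: drop the event instead of throwing into the engine's thread.
        }
    }

    // Returns a copy so callers can iterate without holding the lock.
    synchronized List<ParsedEvent> snapshot() {
        return new ArrayList<>(events);
    }
}
```

Because the callbacks are asynchronous, the collector synchronizes access to its list. A real application would more likely hand each parsed event to its own queue or UI thread.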

The low-level API also requires you to be prepared to deal with raw video frames for every video stream present in the meeting. A meeting can have a large number of participants, and each participant could, theoretically, have multiple video streams that they are sending into the meeting. Each video stream has a unique video stream ID.
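Since one participant may own several streams, an application typically keeps its own index keyed by stream ID. The sketch below shows one possible shape for that bookkeeping. It assumes that stream IDs fit in a `long` and that the application can associate each stream with some participant identifier. Neither detail is confirmed by the text above, and the `StreamRegistry` class is purely illustrative.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import java.util.concurrent.ConcurrentHashMap;

// Illustrative bookkeeping only; not part of the SDK.
final class StreamRegistry {
    // Stream ID -> participant ID. Stream IDs are unique, so they make a safe key.
    private final Map<Long, String> ownerByStream = new ConcurrentHashMap<>();

    void add(long streamId, String participantId) {
        ownerByStream.put(streamId, participantId);
    }

    void remove(long streamId) {
        ownerByStream.remove(streamId);
    }

    // All stream IDs currently attributed to one participant, in ascending order.
    Set<Long> streamsOf(String participantId) {
        Set<Long> result = new TreeSet<>();
        for (Map.Entry<Long, String> entry : ownerByStream.entrySet()) {
            if (entry.getValue().equals(participantId)) {
                result.add(entry.getKey());
            }
        }
        return result;
    }

    int size() {
        return ownerByStream.size();
    }
}
```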

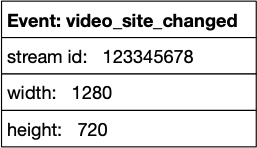

An example of dealing with one video stream follows. After initializing and joining a meeting, your application begins to receive video messages on the asynchronous video callback function that you registered with the low-level API. All such events are formatted in XML. When a participant's video stream becomes available as a result of you joining a meeting, you will receive events such as the these (a subset of the actual data is represented graphically instead of in XML for clarity):

This event tells you that there is a new video stream available that will be 1280 pixels wide by 720 tall. This stream will be represented by ID 123345678. In order to begin receiving video frames for this video stream, you will need to enable it (using a low-level API call). Once you enable the video stream, your application will receive another XML event:

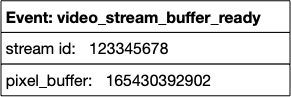

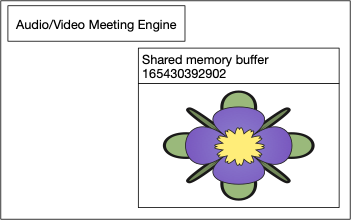

This event lets you know that the video stream with ID 123345678 is now active and any new frames available will be at memory location 165430392902. The memory location is created by the Audio/Video engine and will always contain the last frame received from the meeting:

The low-level API provides a call that lets you query whether a new frame is available in the shared memory buffer (pixel buffer). This call, named videoFrameReady, takes a stream ID as a parameter and returns a Boolean indicating whether a new frame is ready to be retrieved. If it returns true, your application needs to read the memory at the pixel buffer associated with that stream ID (in our example, 165430392902) and convert it from its raw format (usually an RGB888-formatted image) into something that can be displayed on the platform your application runs on.
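The conversion step can be sketched as follows. This example assumes that the frame has already been copied out of the pixel buffer into a `byte[]`, that the bytes are ordered red, green, blue, and that rows are tightly packed with no padding. None of these details is specified above, so check them against the SDK reference. The output is packed `0xAARRGGBB` integers, the layout used by, for example, `java.awt.image.BufferedImage.TYPE_INT_ARGB`.

```java
// Illustrative helper; not part of the SDK.
final class Rgb888 {
    // Converts tightly packed RGB888 bytes into opaque 0xAARRGGBB pixels.
    static int[] toArgb(byte[] rgb, int width, int height) {
        int pixels = width * height;
        if (rgb.length < pixels * 3) {
            throw new IllegalArgumentException("buffer too small for " + width + "x" + height);
        }
        int[] argb = new int[pixels];
        for (int i = 0, j = 0; i < pixels; i++, j += 3) {
            // Mask with 0xFF because Java bytes are signed.
            int r = rgb[j] & 0xFF;
            int g = rgb[j + 1] & 0xFF;
            int b = rgb[j + 2] & 0xFF;
            argb[i] = 0xFF000000 | (r << 16) | (g << 8) | b;
        }
        return argb;
    }
}
```

Under these assumptions, a 1280 by 720 frame occupies 1280 × 720 × 3 = 2,764,800 bytes, so reusing the destination array across frames is worth considering in a real renderer.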

It is common practice to put the call to videoFrameReady in a task that repeats multiple times per second. For example, if your application wishes to display video at 30 frames per second, you will need to call videoFrameReady at least 30 times per second to make sure you can receive frames as fast as you'd like to display them.
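One way to build such a repeating task in Java is a `ScheduledExecutorService`. In this sketch, the `frameReady` predicate stands in for the SDK's videoFrameReady call, whose exact binding is not shown here, and `onFrame` is whatever your application does to fetch and display the frame. The `FramePoller` class and both parameters are hypothetical.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.LongConsumer;
import java.util.function.LongPredicate;

// Illustrative polling helper; not part of the SDK.
final class FramePoller {
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();

    // Polls frameReady for one stream at the requested rate and calls onFrame
    // each time a new frame is reported.
    ScheduledFuture<?> start(long streamId, int fps,
                             LongPredicate frameReady, LongConsumer onFrame) {
        long periodMicros = 1_000_000L / fps; // 33,333 microseconds at 30 fps
        return scheduler.scheduleAtFixedRate(() -> {
            if (frameReady.test(streamId)) {
                onFrame.accept(streamId);
            }
        }, 0, periodMicros, TimeUnit.MICROSECONDS);
    }

    // Stops polling and releases the scheduler thread.
    void stop() {
        scheduler.shutdownNow();
    }
}
```

Note that `scheduleAtFixedRate` suppresses all further runs if the task throws, so a production handler should catch its own exceptions.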

This gives an example of what it is like to interact with the low-level API in the Meeting SDK. Consult the low-level Meeting SDK reference for a full description of all API calls and best practices when using this interface!
